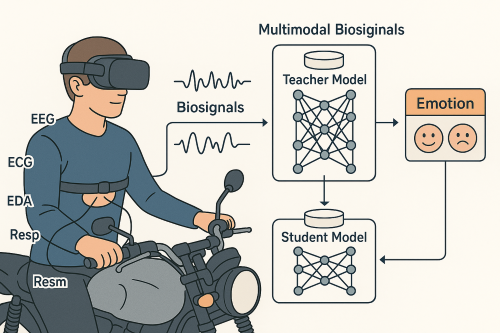

Multimodal affect recognition (in collaboration with Yamaha Motor Company)

Estimating valence and arousal using multimodal physiological signals

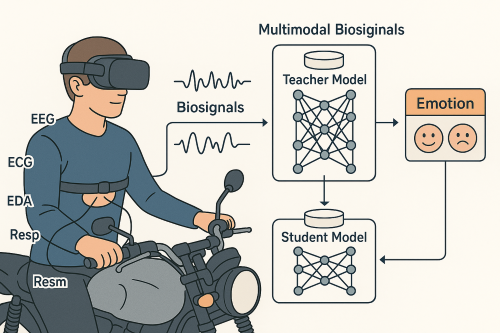

Individuals' backgrounds and smile synchrony in group activity

Reverse-engineering the mechanisms of coordination

A child-friendly system using behavioral data and interpretable AI to help detect early signs of developmental disorders.

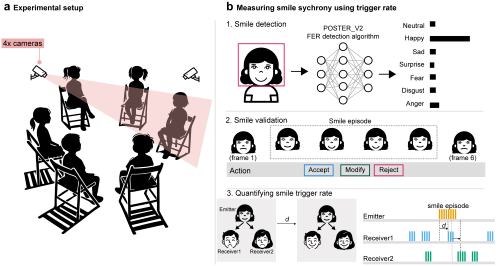

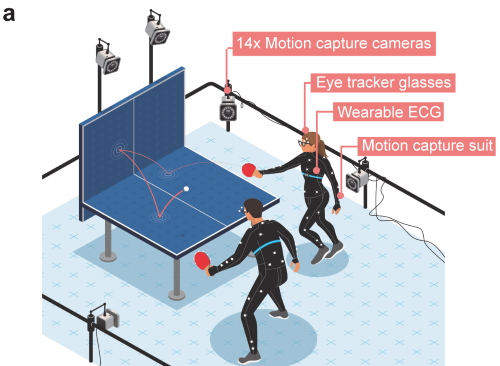

Examining the factors behind coordination in a competitive game

Go/NoGo game equipped with eye tracker

Published in ICAICTA 2014, 2014

Emotion recognition using extreme learning machines.

Recommended citation: Utama, P., & Ajie, H. (2014). Emotion recognition using extreme learning machine. *ICAICTA 2014*.

Published in CoDIT 2018, 2018

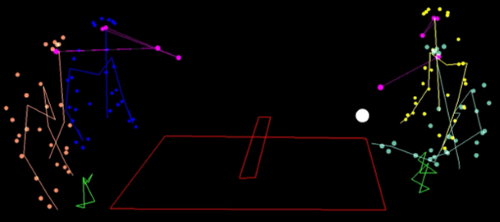

A markerless human activity recognition approach using multi-camera inputs.

Recommended citation: Putra, P.U., Shima, K., & Shimatani, K. (2018). Markerless human activity recognition using multiple cameras. *CoDIT 2018*.

Published in 生体医工学 2019, 2019

Evaluation of children’s inattention and impulsivity under external stimuli.

Recommended citation: 島谷康司, 発智さやか, 坂田茉実, 三谷良真, 島圭介, Putra, P. (2019). Evaluation of inattention and impulsivity in young children (幼児の不注意と衝動性の評価). *生体医工学*.

Published in IEEE CBMS 2020, 2020

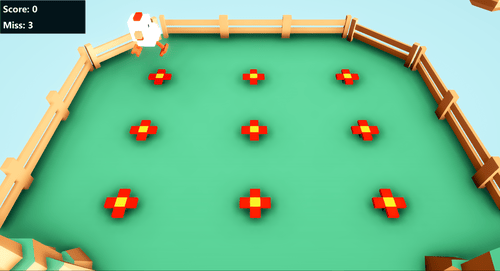

A serious Go/NoGo-task game to estimate inattentiveness and impulsivity.

Recommended citation: Putra, P., Shima, K., & Shimatani, K. (2020). Catchicken. *IEEE CBMS 2020*.

Published in RoMeC 2021, 2021

Driver emotion estimation using multimodal physiological data.

Recommended citation: 西原翼, 島圭介, 神谷昭勝, 南重信, 井上真一, 小池美和, Putra, P. (2021). Driver emotion estimation system (ドライバ感情推定システム). *RoMeC 2021*.

Published in Scientific Reports 11(1), 2021

Gaze and behavioral markers for ASD detection using a Go/NoGo game.

Recommended citation: Putra, P.U., Shima, K., Alvarez, S.A., & Shimatani, K. (2021). Identifying ASD symptoms using the CatChicken Go/NoGo game. *Scientific Reports*, 11, 22012.

Published in ICCAS 2021, 2021

A markerless system for evaluating developmental disorder symptoms.

Recommended citation: Putra, P.U., Shima, K., Hotchi, S., & Shimatani, K. (2021). Markerless behavior monitoring system. *ICCAS 2021*.

Published in IEICE Conference Archives, 2022

Markerless infant behaviour evaluation using spatial memory.

Recommended citation: 原和江, 坂田茉実, 島圭介, 島谷康司, Putra, P. (2022). Infant and toddler behaviour evaluation using spatial memory (空間メモリを用いた乳幼児行動評価). *IEICE*.

Published in PLoS ONE 17(1), 2022

A deep learning model for recognizing human activities from multiple camera views.

Recommended citation: Putra, P.U., Shima, K., & Shimatani, K. (2022). A deep neural network model for multi-view human activity recognition. *PLoS ONE*, 17(1), e0262181.

Published in 23rd SICE System Integration Division Conference (SICE SI 2022), CD-ROM, 2022

A Go/NoGo game–based system for evaluating children’s attention function using the CatChicken task.

Recommended citation: Yusei, D., Sakata, M., Mikami, H., Putra, P.U., Shima, K., & Shimatani, K. (2022). Children’s Attention Function Evaluation System by Go/NoGo Game CatChicken (Go/NoGoゲームCatChickenによる児の注意機能評価システム). *23rd SICE System Integration Division Conference (SICE SI 2022)*, Paper No. 2A2-E11.

Published in ETRA 2024, 2024

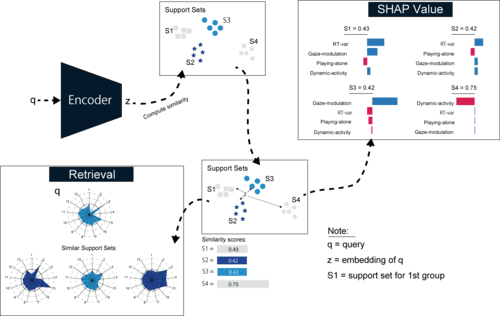

A fine-scale analysis of anticipatory eye movements using SHAP.

Recommended citation: Putra, P.U., & Kano, F. (2024). Anticipatory eye movement and SHAP analysis. *ETRA 2024*.

Published in OSF Preprint, 2025

A study of predictive alignment and coordination using multimodal sensing.

Recommended citation: Putra, P.U., & Kano, F. (2025). Alignment in Action Anticipation. *OSF Preprint*.

Published in Methods in Ecology and Evolution 16(4), 2025

A flexible YOLO-based framework for automated animal behaviour quantification.

Recommended citation: Chan, A.H.H., Putra, P., Schupp, H., Köchling, J., Straßheim, J., Renner, B., et al. (2025). YOLO-Behaviour. *Methods in Ecology and Evolution*, 16(4), 760–774.

Published:

In this talk, I gave a brief introduction to social signal processing and explained how it can benefit researchers studying human behavior and clinical psychology more broadly.

Published:

This study investigates how humans coordinate and perceive actions in a dynamic ball interception task. In this task, two individuals must continuously coordinate their actions to keep bouncing a table tennis ball towards the wall. We tracked the participants' body and eye movements to analyze their coordination. From these data, we extracted eye-movement and action features, such as anticipatory looks, ball pursuit duration, and the kinematic energy of the racket. To understand individuals’ movement patterns, we analyzed the Lyapunov spectrum of their racket movements. In addition, we combined a Hidden Markov Model with the action features to identify transitions from stable to semi-stable coordination states. Our preliminary findings suggest that participants’ racket movements show chaotic behavior in both short and long coordination sequences. This behavior may result from their attempts to compensate for their partner’s actions or their own errors. We also observed significant differences in eye and body movements during the transition from stable to semi-stable coordination. In the semi-stable state, pursuit durations were shorter and racket movements were more irregular than in the stable state. Overall, our study offers a quantitative framework for understanding the dynamics of human movement and perception during realistic interception tasks.

Published:

Here, I presented practical guidance on how to use deep-learning–based facial expression recognition models. In the talk, I explained to the community which aspects of these models are useful and what their limitations are. I also highlighted factors they should be aware of, such as the lateral position of the face in the image and the demographic background of participants whose facial expressions are being analyzed automatically.

Published:

Humans have coordinated with one another, animals, and machines for centuries, yet the mechanisms that enable seamless collaboration without extensive training remain poorly understood. Previous research on human-human and human-agent coordination—often relying on simplified paradigms—has identified variables such as action prediction, social traits, and action initiation as key contributors to successful coordination. However, how these factors interact and influence coordination success in ecologically valid settings remains unclear. In this study, we reverse-engineered the coordination process in a naturalistic, turn-taking table tennis task while controlling for individual skill levels. We found that well-calibrated internal models—reflected in individuals’ ability to predict their own actions—strongly predict coordination success, even without prior extensive training. Using multimodal tracking of eye and body movements combined with machine learning, we demonstrate that dyads with similarly accurate self-prediction abilities coordinated more effectively than those with lower or less similar predictive skills. These well-calibrated individuals were also better at anticipating the timing of their partners’ actions and relied less on visual feedback to initiate their own, enabling more proactive rather than reactive responses. These findings support motor control theories, suggesting that internal models used for individual actions can extend to social interactions. This study introduces a data-driven framework for understanding joint action, with practical implications for designing collaborative robots and training systems that promote proactive control. More broadly, our approach—combining ecologically valid tasks with computational modeling—offers a blueprint for investigating complex social interactions in domains such as sports, robotics, and rehabilitation.
